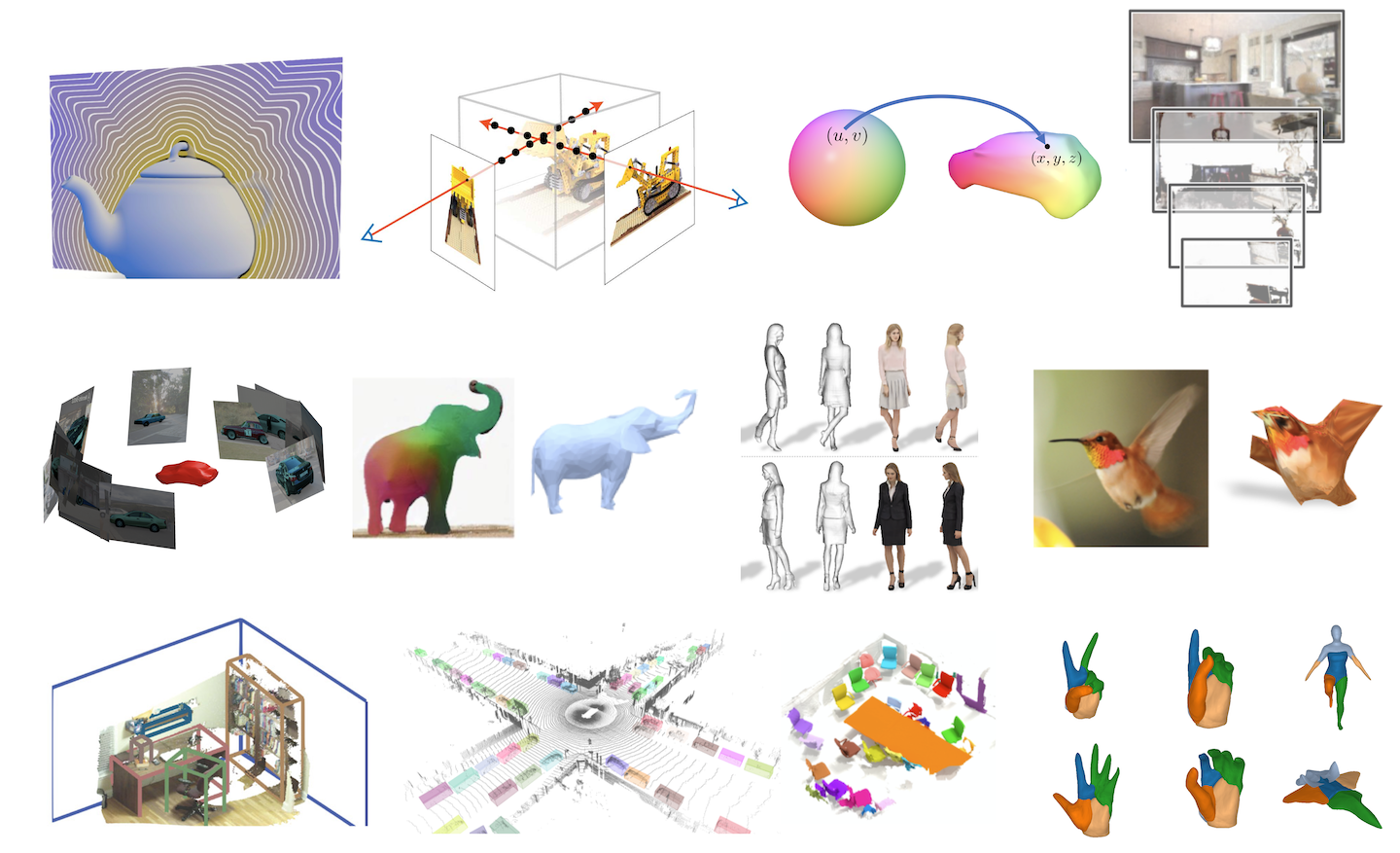

3D Computer Vision

Learning for 3D Vision

Autonomous agents must understand and interact with the 3D environments they operate in. The ability to infer, model, and use 3D representations is central to AI applications such as robotic manipulation, autonomous driving, virtual reality, and photo editing. Progress toward 3D understanding in computer vision has accelerated with the advent of deep learning methods. This area explores the intersection of 3D vision and learning-based approaches, with an emphasis on recent advances in the field.

It covers topics including:

- Explicit, Implicit, and Neural 3D Representations

- Differentiable Rendering

- Single-view 3D Prediction: Objects, Scenes, and Humans

- Neural Rendering

- Multi-view 3D Inference: Radiance Fields, Multi-plane Images, Implicit Surfaces, etc.

- Generative 3D Models

- Shape Abstraction

- Mesh and Point Cloud Processing

1. Learning the basics of rendering with PyTorch3D, exploring 3D representations, and practicing the construction of simple geometry

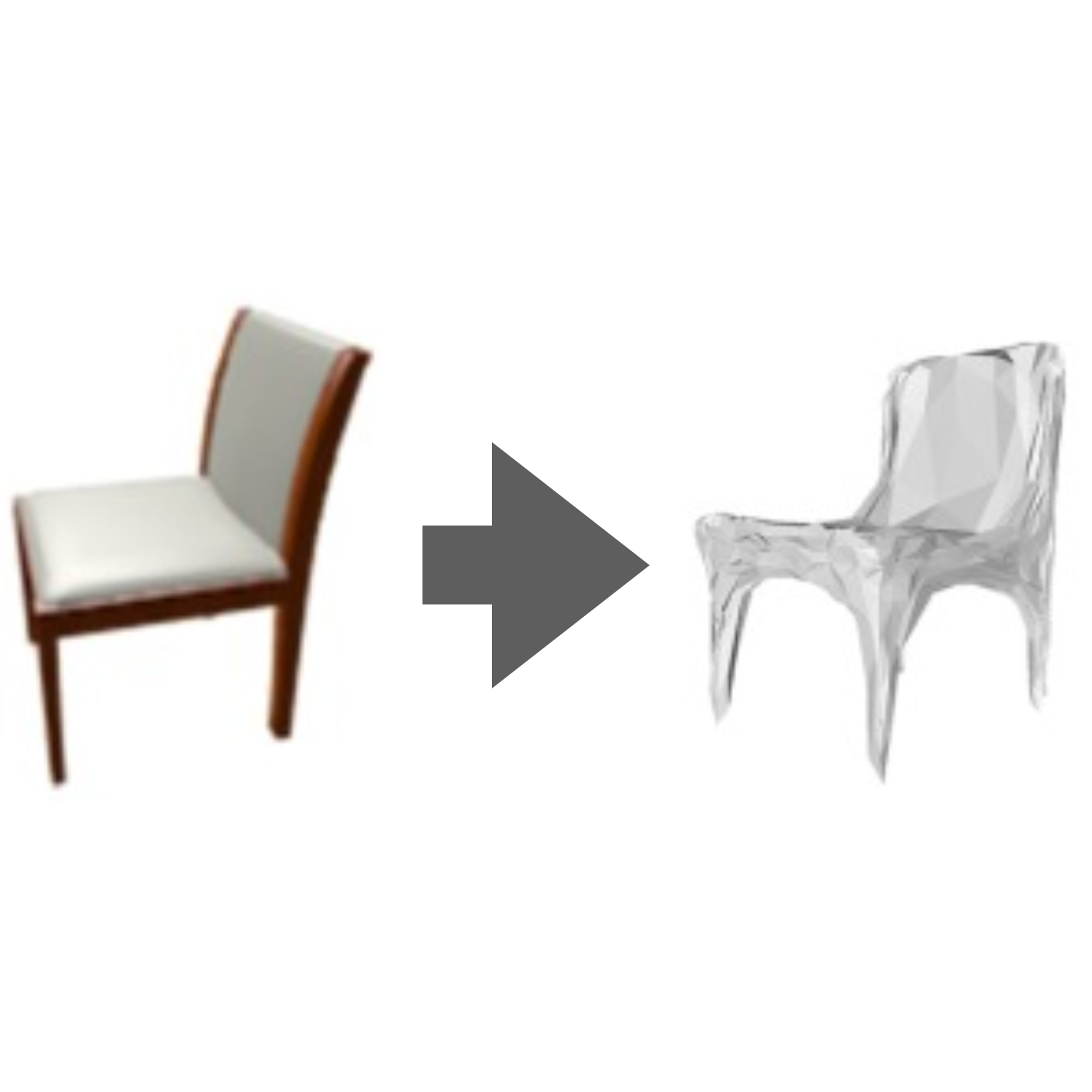

2. Exploring loss functions and decoder architectures for regressing voxel, point cloud, and mesh representations from single-view RGB input

3. Volume Rendering and Neural Radiance Fields
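
As an illustration of the volume rendering step in item 3, the emission-absorption integral along a ray is usually approximated by a sum over discrete samples. The sketch below is a minimal, framework-free version for a single ray. The function name `composite_ray` and the toy sample values are assumptions made for illustration, and a real implementation would batch this over many rays with tensors.

```python
import math

def composite_ray(densities, colors, deltas):
    """Approximate emission-absorption volume rendering along one ray.

    densities: non-negative density sigma_i at each sample
    colors:    (r, g, b) tuple at each sample
    deltas:    length of the ray segment covered by each sample
    Returns the composited RGB value and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light surviving up to the current sample
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution of this sample
        weights.append(weight)
        for c in range(3):
            rgb[c] += weight * color[c]
        transmittance *= 1.0 - alpha            # light left for later samples
    return rgb, weights

# Toy ray: empty space, then a dense red slab, then a green slab behind it.
rgb, weights = composite_ray(
    densities=[0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.5, 0.5, 0.5],
)
```

In this toy case the empty sample receives zero weight and the red slab absorbs almost all of the light, so the green slab behind it contributes almost nothing. The same weights can also be used to composite depth instead of color.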

1. Implementing sphere tracing for rendering an SDF

2. Implementing an MLP architecture for a neural SDF, and training this neural SDF on point cloud data
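
Sphere tracing, mentioned in item 1, steps along a ray by the value of the SDF at the current point, since that value is a safe distance to travel without crossing the surface. The following is a minimal single-ray sketch in plain Python. The analytic `sphere_sdf`, the function names, and the default thresholds are illustrative assumptions, and a trained neural SDF would take the place of the analytic function.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.dist(p, center) - radius

def sphere_trace(sdf, origin, direction, max_steps=64, eps=1e-5, far=100.0):
    """March along origin + t * direction until the SDF is (nearly) zero.

    direction is assumed to be unit length.
    Returns the hit distance t, or None if no surface is found.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:   # close enough to the surface: report a hit
            return t
        t += dist        # safe step: no surface lies closer than dist
        if t > far:      # ray has left the region of interest
            break
    return None

# A ray aimed at the unit sphere from z = -3 hits it at distance 2.
hit = sphere_trace(sphere_sdf, origin=(0.0, 0.0, -3.0), direction=(0.0, 0.0, 1.0))
# A ray pointing sideways never reaches the sphere.
miss = sphere_trace(sphere_sdf, origin=(0.0, 0.0, -3.0), direction=(0.0, 1.0, 0.0))
```

The step size adapts automatically: it is large in empty space and shrinks near the surface, which is why the method needs far fewer samples than fixed-step ray marching.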

1. Implementing a function for converting SDF into volume density

2. Extending the NeuralSurface class to predict color
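
The source does not say which SDF-to-density conversion is used in item 1. One common choice is the VolSDF-style formulation, which passes the negated signed distance through the CDF of a zero-mean Laplace distribution and scales the result. The sketch below assumes that formulation, and the function name and the default values of `alpha` and `beta` are hypothetical.

```python
import math

def sdf_to_density(signed_distance, alpha=10.0, beta=0.05):
    """Map a signed distance to a volume density (VolSDF-style, assumed).

    density = alpha * LaplaceCDF(-signed_distance; scale=beta)
    alpha sets the density deep inside the surface and beta controls
    how sharply the density falls off across the surface.
    """
    s = -signed_distance
    if s <= 0:
        cdf = 0.5 * math.exp(s / beta)         # outside or on the surface
    else:
        cdf = 1.0 - 0.5 * math.exp(-s / beta)  # inside the surface
    return alpha * cdf

outside = sdf_to_density(1.0)      # far outside: density close to 0
on_surface = sdf_to_density(0.0)   # on the surface: alpha / 2
inside = sdf_to_density(-1.0)      # deep inside: density close to alpha
```

A smaller `beta` gives a sharper transition and a crisper surface, at the cost of gradients that are concentrated in a thinner shell around it.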

Implementing the Phong reflection model in order to render the SDF volume we trained under different lighting conditions
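
As a rough sketch of the Phong reflection model, the shaded intensity at a surface point is the sum of an ambient term, a diffuse term, and a specular term. The version below computes a scalar intensity for one point and one light. The function names and coefficient defaults are illustrative assumptions. For an SDF, the normal would typically come from the normalized gradient of the SDF at the surface point.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return tuple(x / norm for x in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def phong(normal, light_dir, view_dir, ka=0.1, kd=0.6, ks=0.3, shininess=32.0):
    """Scalar Phong intensity at one surface point.

    light_dir points from the surface toward the light and
    view_dir points from the surface toward the camera.
    """
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    n_dot_l = dot(n, l)
    diffuse = max(n_dot_l, 0.0)
    specular = 0.0
    if n_dot_l > 0.0:
        # Mirror the light direction about the normal: r = 2 (n . l) n - l
        r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
        specular = max(dot(r, v), 0.0) ** shininess
    return ka + kd * diffuse + ks * specular

# Light and camera both directly above the surface: all three terms contribute.
head_on = phong((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
# Light behind the surface: only the ambient term remains.
back_lit = phong((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (0.0, 0.0, 1.0))
```

Changing `light_dir` while keeping the geometry fixed is enough to relight the same surface, which is what makes this model convenient for rendering one trained SDF under several lighting conditions.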

Implementing a classification model that assigns point clouds to one of 3 classes (chairs, vases, and lamps)

Implementing a segmentation model that assigns each point of a chair object to one of 6 semantic classes